Lin Ai

I am a Senior Applied Scientist at Microsoft, where I am part of the IDEAS Research group led by Scott Counts.

I received my Ph.D. in Computer Science from SpeechLab at Columbia University, advised by Prof. Julia Hirschberg. I hold a Master of Science in Computer Science from Columbia University and a Bachelor of Mathematics, with majors in Computer Science, Statistics, and Actuarial Science, from the University of Waterloo.

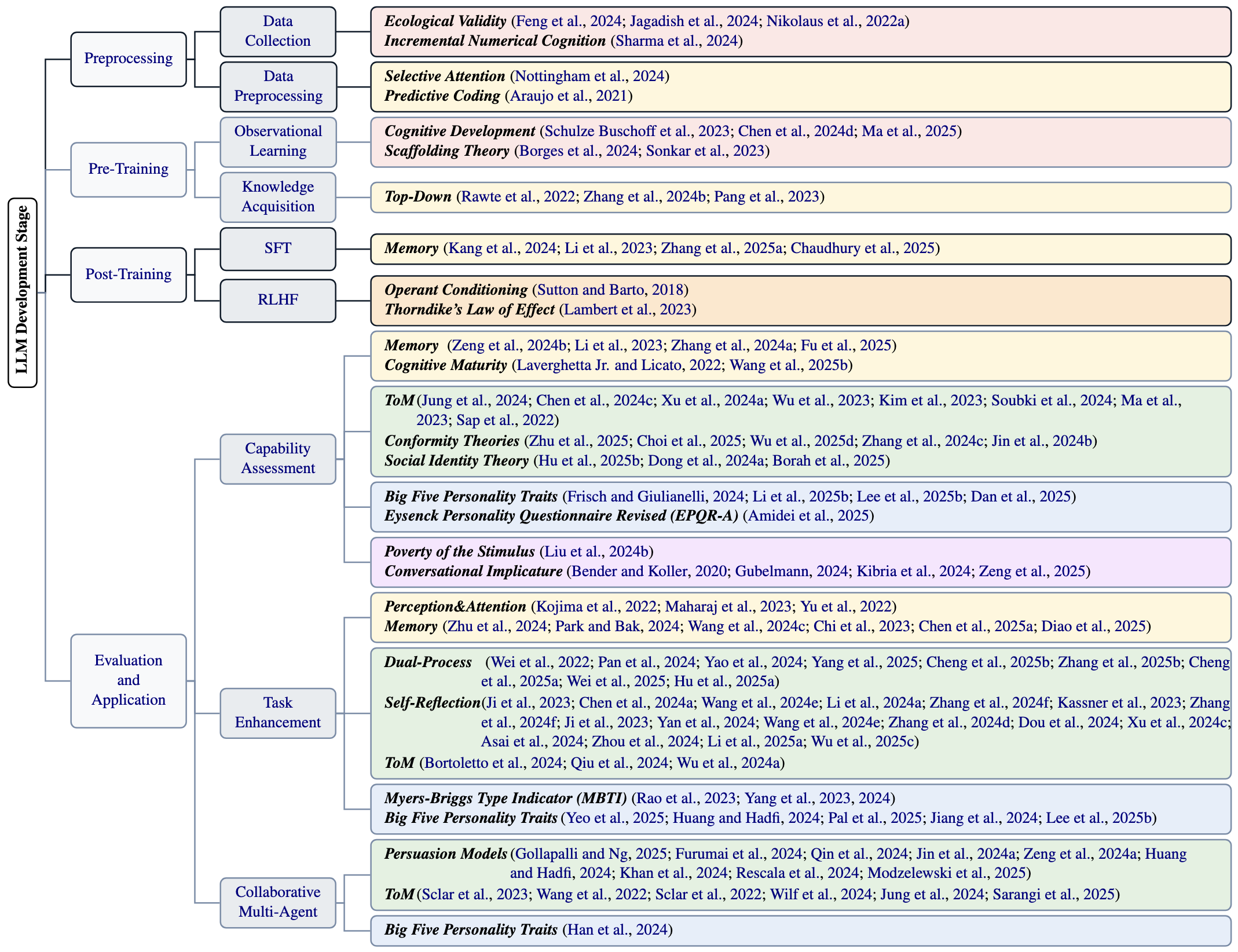

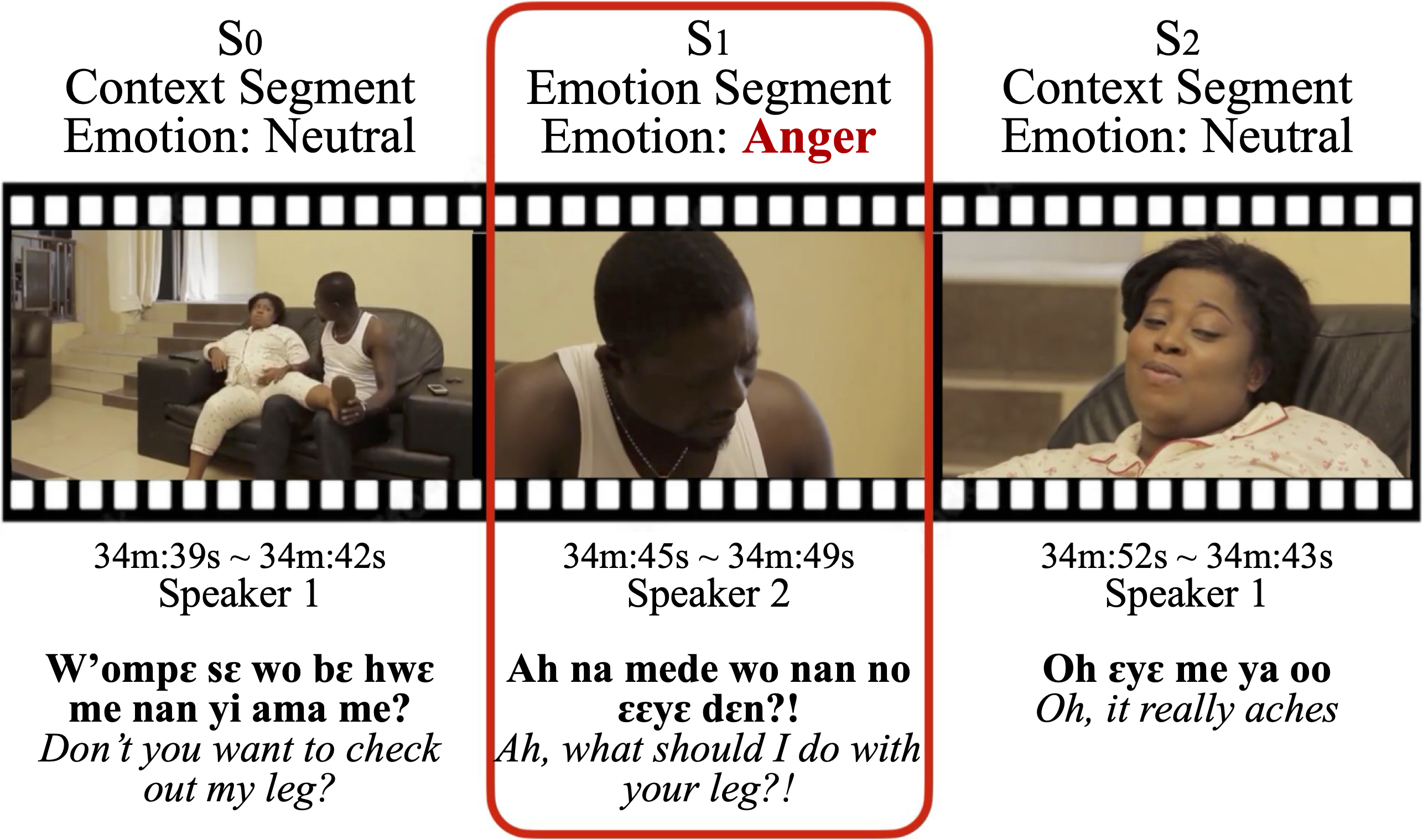

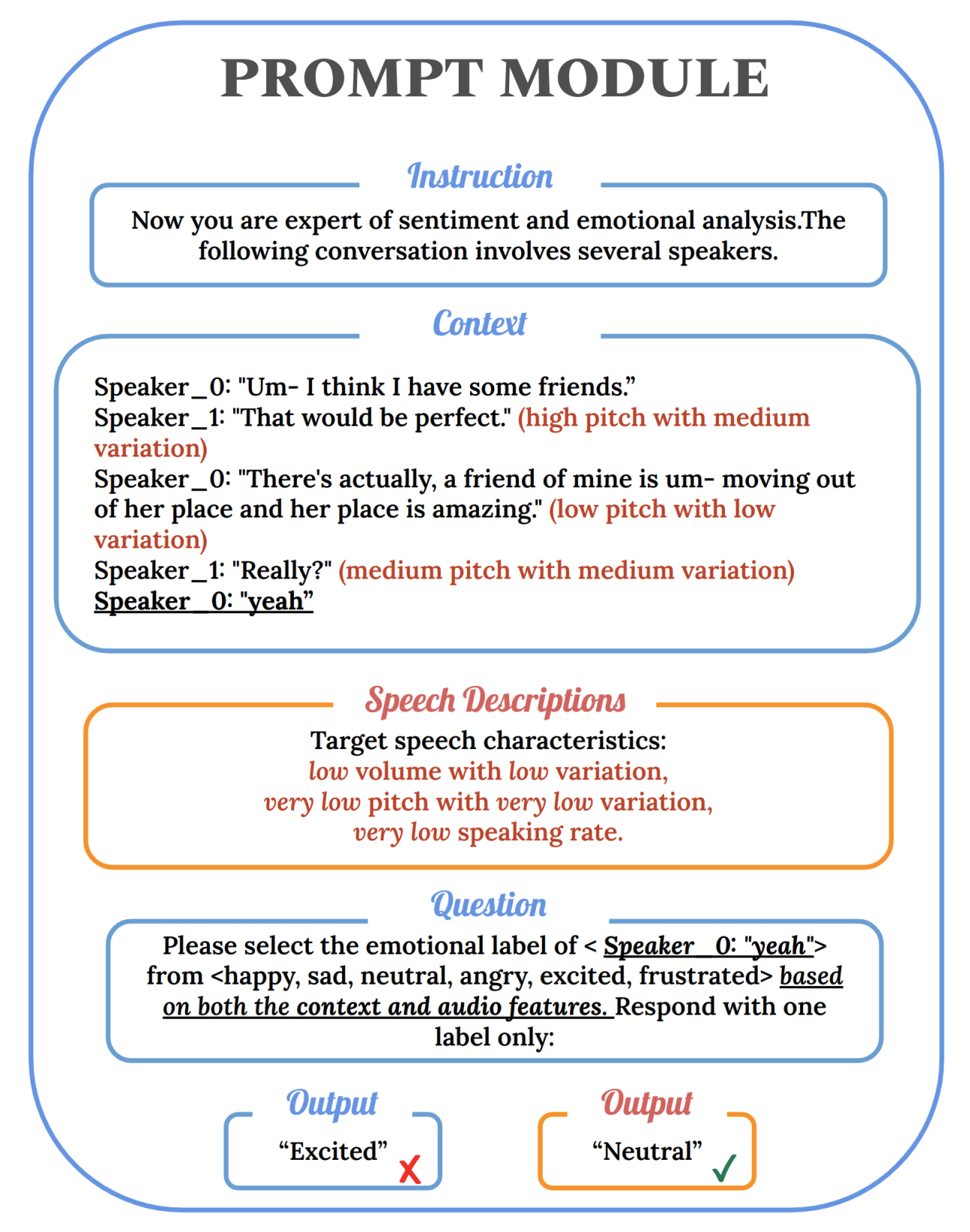

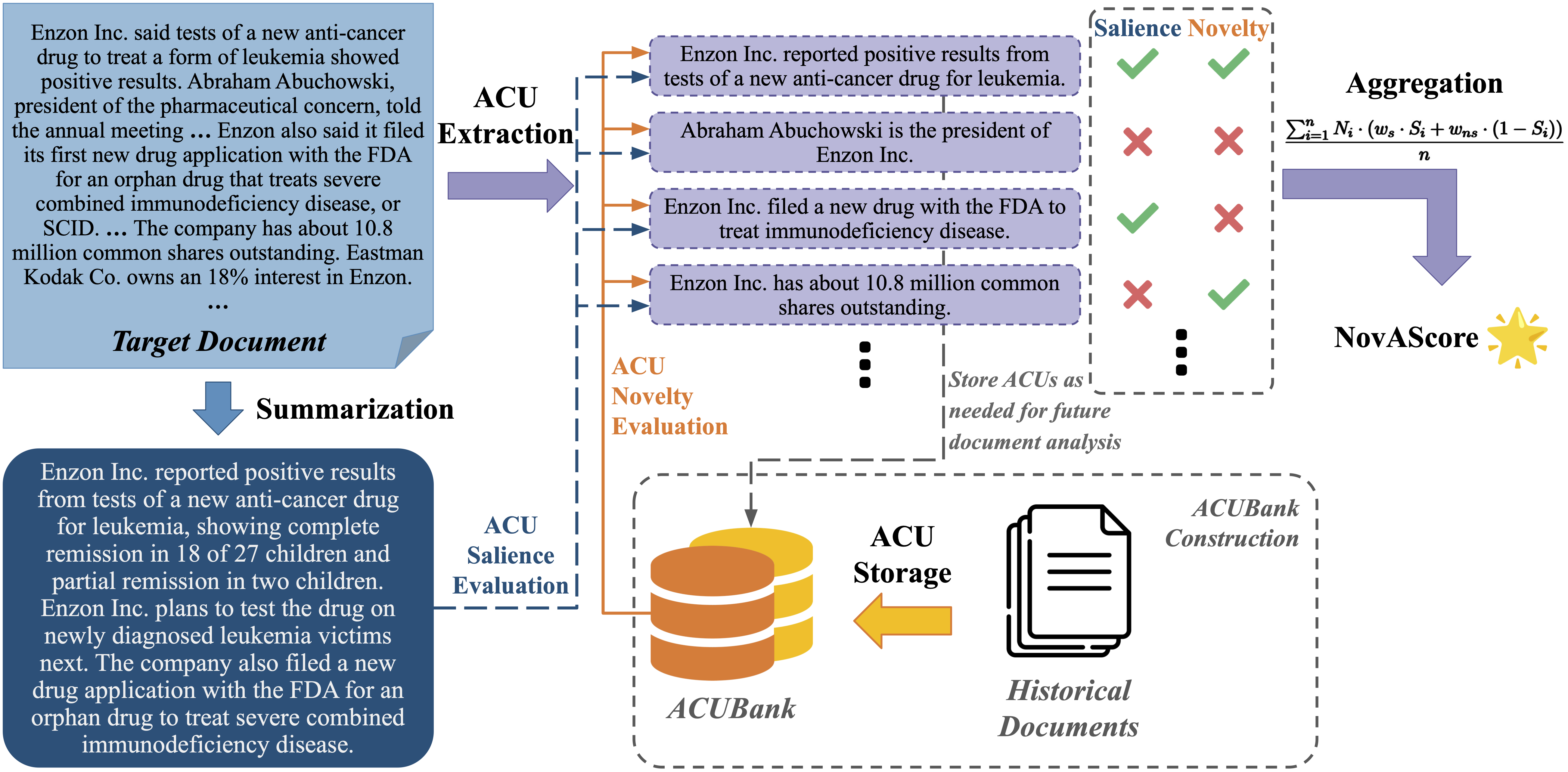

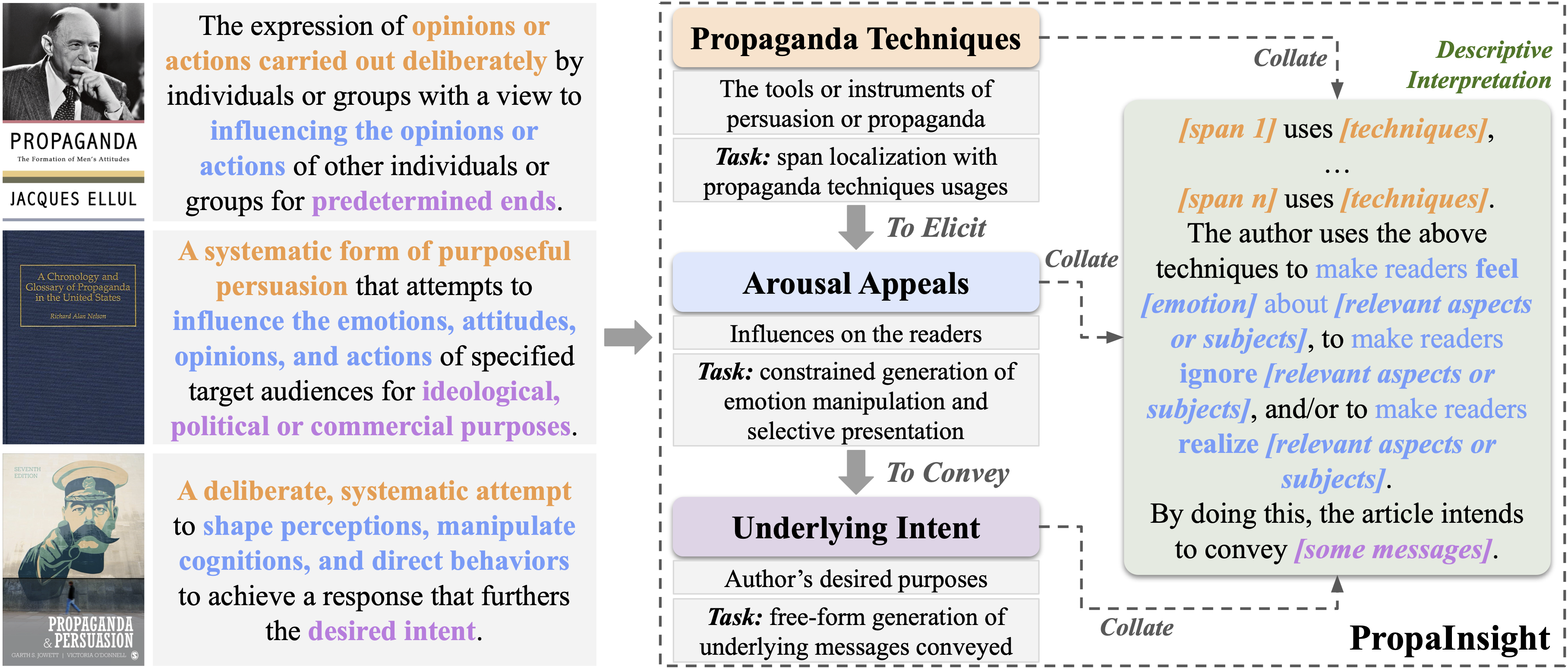

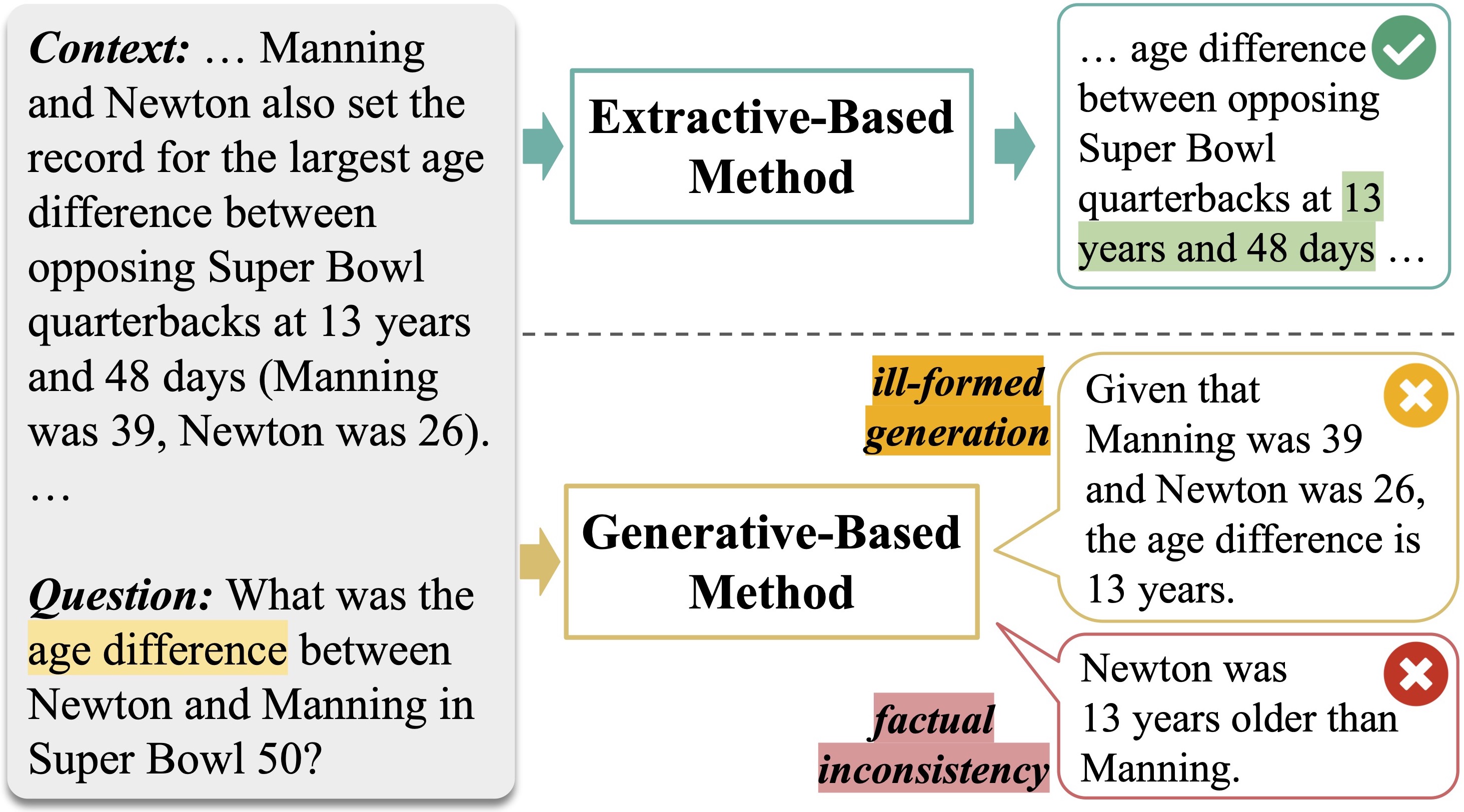

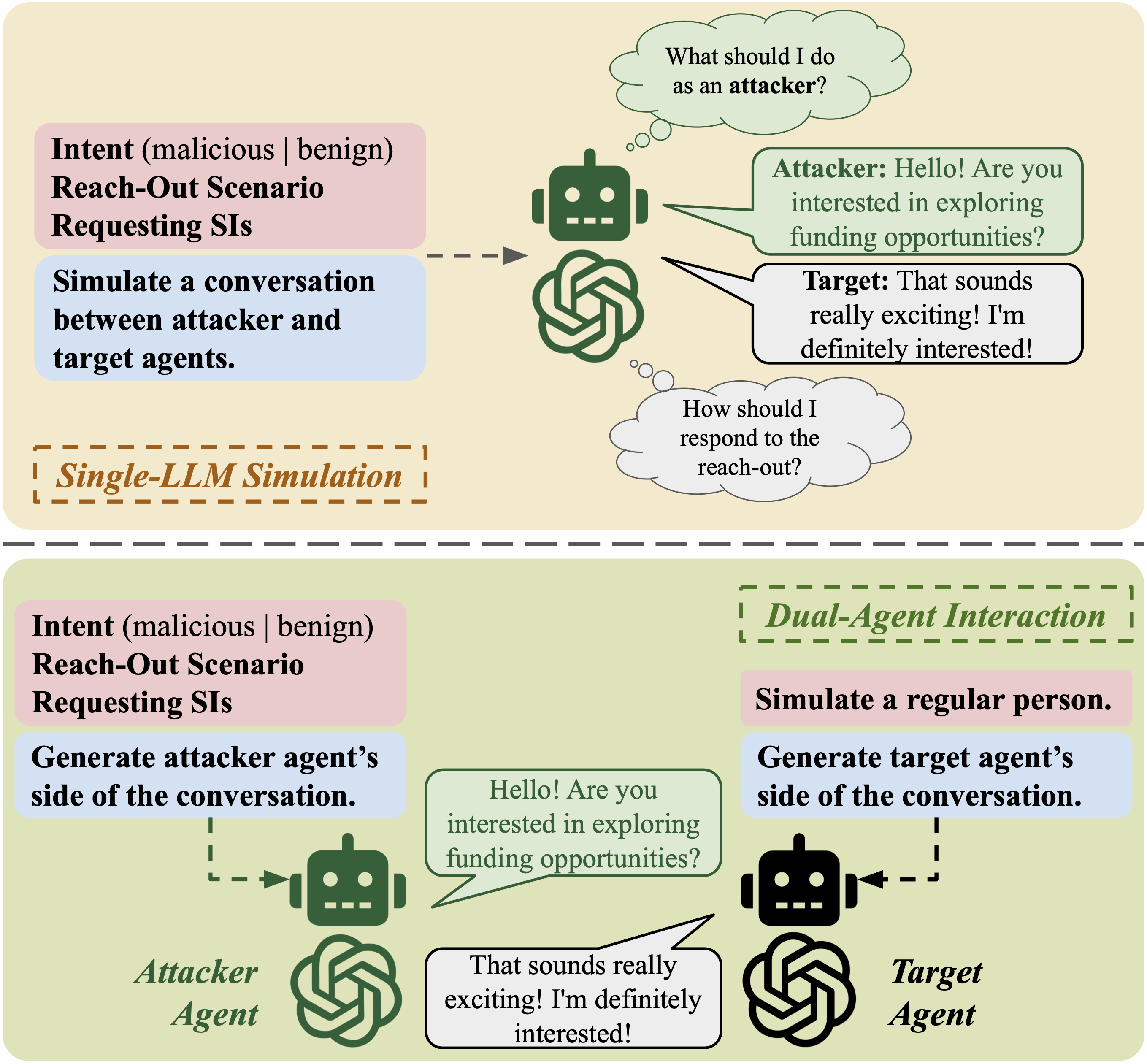

My research centers on NLP and information disorder, including misinformation detection, malicious intent analysis, and content analysis. Currently, I’m focusing on LLM safety and alignment. More broadly, I am passionate about developing AI systems that prioritize ethical considerations and contribute to responsible AI deployment.

news

| Date | News |
|---|---|
| Nov 10, 2025 | I’m starting at Microsoft and have moved to the Bay Area! |
| Nov 04, 2025 | Thrilled to be at EMNLP 2025, presenting our poster on AI x Health Coaching. Come by and say hi! |
| Sep 16, 2025 | I defended my dissertation! |
| Jan 19, 2025 | Thrilled to be at COLING 2025, presenting 2 oral papers. Come listen and connect! |
| Nov 12, 2024 | Excited to be at EMNLP 2024, presenting 3 posters. Come say hi and check them out! |